At Google’s London Summit last week, developer news included three new AI-related features in Firebase Studio, a browser-based IDE for the Firebase platform: an autonomous agent mode, support for Model Context Protocol (MCP – see The Register’s explainer), and support for the Gemini command line interface. Jeanine Banks, VP and general manager of Developer X at Google, introduced these advances during the keynote, explaining, “Firebase Studio is our AI workspace that allows you to build full stack AI applications and go from prototype to scale so much faster.”

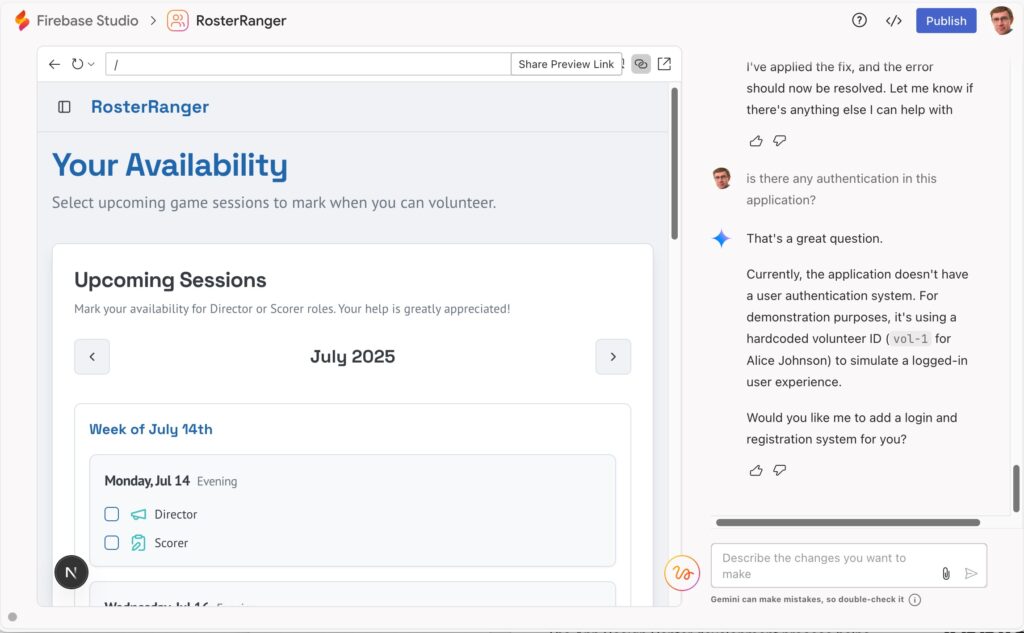

Firebase Studio includes a prototyper that builds a complete application from a prompt. Users (who might not be developers) can iterate on the initial output either in a wizard view, which hides the code, or in a code editor based on the ubiquitous Code OSS, the open-source component also used by Visual Studio Code.

Is this AI-guided development just for prototyping, as the name implies, or is it part of a new generation of what is sometimes called citizen development, the idea that non-developers can create business applications in no-code or low-code IDEs?

Banks told DevClass that Firebase Studio is aimed at several types of developer. “One is the citizen developer. It’s using our app prototype agent which is a full vibe coding experience. You could do previews. You can publish seamlessly in one click.

“The next type of developer is a professional who … previews changes before they’re applied and can assess if it’s right or wrong.”

Citizen development sometimes results in code that’s low quality, unmaintainable, and insecure. Can Firebase Studio and Gemini mitigate this – or are the prototypes meant to be just prototypes that are handed over to a developer team to implement?

“Our belief is that in order to get to production-grade applications, you need professional engineering,” Banks told us. “You might have citizen developers working in one view, bringing ideas to life, and the special engineering team who’s working in the code editor for the most part … you actually have a platform that lets both people do what they do well to get to that production-grade outcome.”

This touches on a key issue: vibe coding is capable of immediately impressive results even in the hands of non-developers, but can be a disaster if put into production without oversight. Even the originator of the term, Andrej Karpathy, said that it was “not too bad for throwaway projects.” Is there a way of harnessing the power of these tools, of which the Firebase Studio prototyper is an example, in a manner that works for the enterprise and, if so, what are the best practices?

“Let me tell you what we do at Google,” Banks said. “Our employees have permission to use Firebase Studio to build applications, but if you want to share the application with other Googlers or outside of Google, you can only use approved, validated cloud projects.”

A Google Cloud Project is the “organizing entity for what you’re building,” the docs state, including settings, permissions, and a link to a billing account. In Firebase Studio, “you build applications that run in the context of a GCP project,” said Banks.

“We have an internal chat room, and internal Googlers come into our chat room, they say, hey I can’t deploy my application I built in Firebase Studio, it’s giving me this message that I don’t have an approved billing project. We’re like, yes, your organization, based on its policies, has decided they don’t want you deploying just any application. You have to go through them and their security reviews before they will approve,” she added.

There is perhaps some mixed messaging here from Google and other vendors. In the keynote at the London Summit, Ulrike Gupta, director customer engineering, said that thanks to advancements in AI, organizations can go “from ideation to something in production much faster.” While this may be true, the best practices around code and security reviews tend to be glossed over, and in small organizations there might not be any suitable team of engineers and security experts to perform the necessary reviews.

Banks referred us to various safeguards within the platform that help to mitigate risk, including a new AI testing agent which is in private preview. “Google Cloud provides a lot of power and security tooling,” she said, and AI assistance can also give security guidance, but she added that “we don’t take responsibility for the security posture of user’s applications … in the terms of service, they’re responsible.”

Why was the topic of security and AI development best practice not mentioned in the keynote, given that it is top of mind for many organizations?

“That’s good feedback because it’s something we talk about very much,” said Banks. “Risk areas are definitely areas of research and development for us and something I agree we should talk about more often, and we do. It’s just that in this particular keynote we didn’t do that.”