Developer Tim Davis, a professor of Computer Science and Engineering at Texas A&M University, has claimed on Twitter that GitHub Copilot, an AI-based programming assistant, “emits large chunks of my copyrighted code, with no attribution, no LGPL license.”

Not so, says Alex Graveley, principal engineer at GitHub and the inventor of Copilot, who responded that “the code in question is different from the example given. Similar, but different.” That said, he added, “it’s really a tough problem. Scalable solutions welcome.”

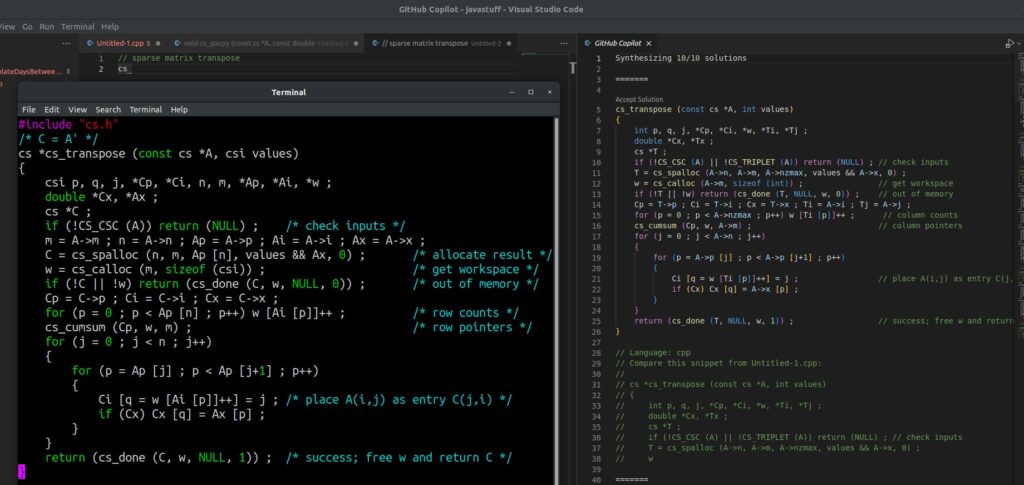

The code Davis posted does seem very close. He typed the comment:

//sparse matrix transpose

in a C++ file, and Copilot’s top suggestion, generated in response, contained not only code but also comments that closely match his own earlier code, such as:

/* place A(i,j) as entry C(j,i) */

Davis also eliminated the possibility that Copilot was only offering the code because he wrote it. Another developer using Copilot was able to get similar results.

It is important to note that the original code in question is itself open source and on GitHub under the LGPL 2.1 license. Open source does not mean free of copyright, though, and there are many different open source licenses, each of which conveys different permissions. One concern in the open source community is that if chunks of open source code are regurgitated wholesale, without specifying any license, this defeats the purpose of the license. Another is that developers may inadvertently combine code under incompatible licenses in one project.

“Illegal source code laundering, automated by GitHub,” claimed Jeremy Soller, principal engineer at System76, home of the Pop!_OS Linux distribution. “You can literally find a piece of code you want, under an incompatible license, then keep probing copilot until it reproduces it. With plausible deniability later (copilot _gave_ me that code)!” he added.

Copilot anticipates this problem to some extent. GitHub documents a public code filter that “detects code suggestions matching public code on GitHub … when the filter is enabled, GitHub Copilot checks code suggestions with their surrounding code of about 150 characters against public code on GitHub. If there is a match or near match, the suggestion will not be shown to you.”

However, Davis said that “when I signed up, I disabled the ‘Allow Github to use my code..’ option. Also note that ‘suggestions matching public code’ is ‘blocked’. Same result.”
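To make the filter’s described behavior concrete, here is a minimal sketch of a window-based matcher in Python. It is not GitHub’s implementation, which is not public: the function names, the whitespace normalization, and the in-memory set standing in for “public code on GitHub” are all assumptions made for illustration. Only the figure of roughly 150 characters comes from the documentation quoted above.

```python
import re

# The "about 150 characters" figure quoted from GitHub's documentation.
WINDOW = 150


def normalize(code: str) -> str:
    """Collapse whitespace runs so formatting differences do not hide a match.

    Assumption: this is a stand-in for whatever "near match" means in the
    real filter, which the documentation does not specify.
    """
    return re.sub(r"\s+", " ", code).strip()


def build_index(public_snippets, window=WINDOW):
    """Return the set of every `window`-character slice of the normalized code.

    Hypothetical stand-in for an index over public code.
    """
    index = set()
    for snippet in public_snippets:
        text = normalize(snippet)
        for start in range(len(text) - window + 1):
            index.add(text[start:start + window])
    return index


def should_suppress(suggestion, index, window=WINDOW):
    """True if any `window`-character slice of the suggestion is in the index."""
    text = normalize(suggestion)
    return any(
        text[start:start + window] in index
        for start in range(len(text) - window + 1)
    )
```

In this sketch a suggestion that differs from public code only in indentation is suppressed, while one with a consistently renamed variable passes, because no 150-character slice matches exactly. That is one way code could be “similar, but different” and still get through; whether the real filter behaves this way is not something the sources here establish.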

Part of the problem is that open source code, by design, is likely to appear in multiple projects by different people, so it will end up multiple times on GitHub and among multiple users of Copilot. With or without Copilot, developers can make wrongful use of copyrighted code.

Graveley is defensive, talking yesterday about “Copilot fear mongering thus far: – steals code …”

The Copilot FAQ is not altogether clear on the subject. It states: “The code you write with GitHub Copilot’s help belongs to you, and you are responsible for it,” and also that “the vast majority of the code that GitHub Copilot suggests has never been seen before.”

However, it also says that “about 1% of the time, a suggestion may contain some code snippets longer than ~150 characters that matches the training set.” Another FAQ entry states that “You should take the same precautions as you would with any code you write that uses material you did not independently originate. These include rigorous testing, IP scanning, and checking for security vulnerabilities.”

Developers may wonder: is this AI that generates code, or AI that searches the web for open source code that might be suitable?