Intel is transitioning its developer tools to LLVM for cross-architecture support and to a specification called oneAPI for accelerated computing, in an effort to reduce the dominance of Nvidia’s proprietary CUDA language.

“Accelerated computing, for it to become pervasive, it needs to be standards-based, scaleable and multi-vendor and ideally multi-architecture. We set out four years ago to do that with oneAPI … and we’ve got to the point where we’re becoming productive for developers. The standardized library interfaces have all been proven out as well. It’s the right thing for the industry … folks who are having to embed codes and have them live for a long time inside an embedded system can’t live with a single-vendor code,” Intel’s Joe Curley, VP and General Manager for software products, told DevClass.

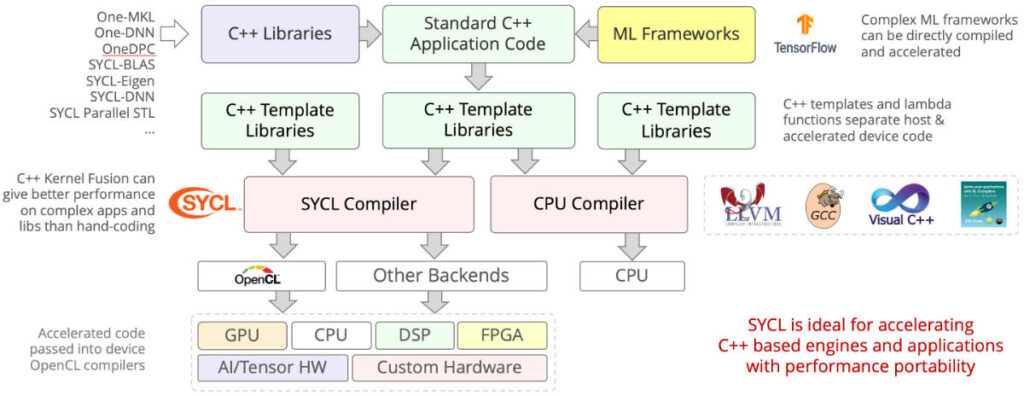

Intel’s System Studio, a collection of compilers and libraries, has been replaced by a product called Intel oneAPI Toolkit. The oneAPI Specification covers 10 core elements, many of which will be familiar to developers who have used Intel libraries, such as oneAPI Threading Building Blocks and oneAPI Math Kernel Library. The heart of it, though, is oneAPI Data Parallel C++, which is based on SYCL (pronounced “sickle”, and not an acronym), a specification for a C++ programming model for accelerated computing published by Khronos Group. There is also an open source tool, SYCLomatic, which converts code from CUDA to SYCL.

Intel used to back OpenCL as the standards-based alternative to Nvidia’s CUDA, which only runs on Nvidia GPUs. Is OpenCL dead?

“I don’t think it’s dead, but the issue with OpenCL was that it never really ramped. You talk to developers, they found some of the header files and other things to be cumbersome, it wasn’t a highly productive language, [though] it was scaleable. We thought that Intel did a very good OpenCL implementation but a lot of other vendors didn’t and never got the performance out of OpenCL that people expect … if you dig into the oneAPI Toolbox we actually have an OpenCL path to run on our hardware if that’s how you’ve chosen to program. But what people like about SYCL is it’s a single code page instead of host and device code. It’s standard C++, it’s not a specialized language,” Curley told us.
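To make the “single code page” point concrete, here is a minimal sketch of what single-source SYCL looks like: host setup and the device kernel sit in the same C++ function, with the kernel written as an ordinary lambda. The helper name `vector_add` is hypothetical, and the `SYCL_LANGUAGE_VERSION` guard is an assumption about how the toolchain signals SYCL mode; the serial fallback is there only so the sketch still builds with a plain C++ compiler.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Assumption: the compiler predefines SYCL_LANGUAGE_VERSION in SYCL mode.
#ifdef SYCL_LANGUAGE_VERSION
#include <sycl/sycl.hpp>
#endif

// Hypothetical helper: element-wise sum of two equally sized vectors.
std::vector<float> vector_add(const std::vector<float>& a,
                              const std::vector<float>& b) {
    assert(a.size() == b.size());
    const std::size_t n = a.size();
    std::vector<float> c(n);
    if (n == 0) return c;  // nothing to compute

#ifdef SYCL_LANGUAGE_VERSION
    sycl::queue q;  // default device chosen by the runtime
    {
        // Buffers wrap the host data for the duration of this scope.
        sycl::buffer<float, 1> buf_a(a.data(), sycl::range<1>(n));
        sycl::buffer<float, 1> buf_b(b.data(), sycl::range<1>(n));
        sycl::buffer<float, 1> buf_c(c.data(), sycl::range<1>(n));

        q.submit([&](sycl::handler& h) {
            sycl::accessor in_a(buf_a, h, sycl::read_only);
            sycl::accessor in_b(buf_b, h, sycl::read_only);
            sycl::accessor out(buf_c, h, sycl::write_only, sycl::no_init);

            // Device code: one work-item per element, in the same source file.
            h.parallel_for(sycl::range<1>(n), [=](sycl::id<1> i) {
                out[i] = in_a[i] + in_b[i];
            });
        });
    }  // buf_c goes out of scope here, so results are copied back into c
#else
    // Serial fallback when no SYCL toolchain is in use.
    for (std::size_t i = 0; i < n; ++i) c[i] = a[i] + b[i];
#endif
    return c;
}
```

Calling `vector_add({1.0f, 2.0f}, {3.0f, 4.0f})` should yield a two-element vector holding the sums. With Intel’s tools this would typically be built with something like `icpx -fsycl`; other SYCL implementations use their own drivers and flags.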

Another thing that will catch the eye of developers is that “All Intel OneAPI toolkits are offered to developers free of charge. The free products come with community support. To get priority support, you will need to purchase the commercial products,” according to the documentation.

These are specialized tools. Developers working in high-level languages like Python, JavaScript or Java do not need to be aware of them, though they may be used under the covers. “The people that write the runtimes, the frameworks, they’re on our tools,” said Curley. Another key group of users is HPC (High Performance Computing) developers. AI is compute-intensive, which has also increased the importance of fully exploiting the performance potential of CPUs and GPUs.

Should we not expect that just as CUDA is for Nvidia hardware, Intel’s tools will work best with Intel hardware? There is some truth in that, but Curley insists that “oneAPI is a very simple concept. It’s building a scalable software stack that provides standardized language and library interfaces for accelerated and parallel computing. While we’ve proposed this, it’s not exclusive to Intel. Fujitsu, with its Arm-based A64FX processor, broke the global ML (machine learning) performance record a couple of years ago, using oneAPI.”

Curley added that “oneAPI has been proven and demonstrated on Nvidia, AMD, Intel GPUs, AMD, Intel, ARM CPUs, and even though performance varies, on multiple FPGA brands.”

SYCL is based on C++, but for safety reasons there is increasing interest in Rust, Go, and other languages with better protection against insecure coding. Curley, though, told DevClass that developers are still predominantly choosing C++. “C++ has actually been growing these last couple of years, and part of that is because it actually is really good for people who have needs for performance,” he said. “There’s a whole bunch of work in the C++ standards body around safety … safety is its own topic. What language you choose, what layer of abstraction you want, how to structure your code, that ends up being the developer’s choice.”

Intel compilers are transitioning to use the LLVM compiler infrastructure. “The Intel Fortran compiler and the Intel C compiler are pretty well adopted, but now they lower into a standards-based stack through an LLVM compilation chain to get at the accelerators below,” he told us.

A notable SYCL project is SYCLOPS, formed earlier this year by a group of eight European organizations to accelerate AI via RISC-V hardware along with SYCL. “Unfortunately, all popular AI accelerators today use proprietary hardware-software stacks,” said the project’s launch release, with SYCLOPS being “an effort to break this monopoly.”

Many developers are pragmatists, though, and Intel and its allies face a tough challenge. “Even if you were wanting to move away from CUDA, the churn of the alternative models would be scary. Do you want to develop for CUDA which has been stable and developed for quite some time, or do you want to try a new standard that you don’t know if it will be obsolete in a couple of years?” remarked one sceptical developer a couple of years ago.

Developers keen to try oneAPI and SYCL can download the tools, or head to Intel’s Developer Cloud, which enables programming on a variety of hardware using oneAPI without needing to make any purchases.