Cody, an AI coding assistant from Sourcegraph, now supports Anthropic Claude 3 LLMs (Large Language Models) as well as OpenAI GPT-4 and Azure OpenAI.

According to Sourcegraph, a company specializing in code search and intelligence, “all Claude 3 models show better performance in code generation.” Another feature of the Claude 3 models is a larger context window, which is the maximum size, measured in tokens, of the input to the LLM. Anthropic said that Claude 3 models “initially offer a 200K context window,” but that the underlying capability supports inputs “exceeding 1 million tokens.”

This makes Cody’s maximum token input, which is “roughly 7K tokens,” look limiting. Sourcegraph says the limit exists because a larger code context might harm response quality, but the company believes that Claude 3 is able to process large contexts successfully and states that “we’ll be dramatically expanding Cody’s context window in the coming weeks.”

By comparison, OpenAI’s GPT-4 Turbo has a maximum context window of 128K tokens.

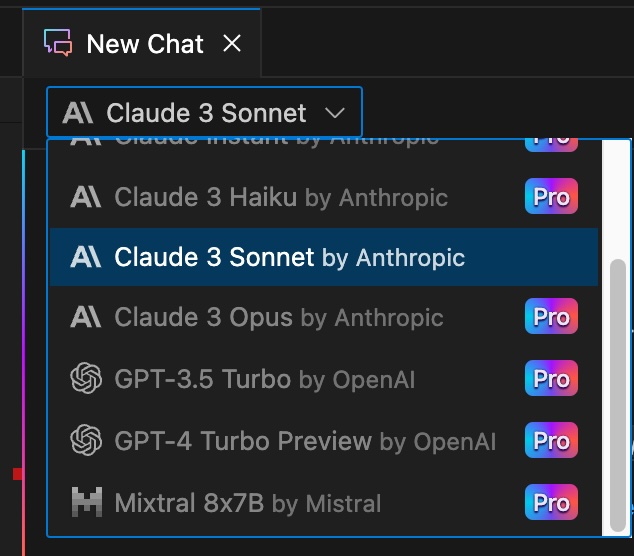

The company claims that after an early trial “of Cody Pro users who primarily used GPT-4, over 55 percent switched their preference to the new Claude 3 models in the month following launch.” Users on Cody’s free plan, which is limited to 500 autocompletions and 20 messages and commands per month, now use Claude 3 Sonnet by default. Sonnet sits in the middle of the three Claude 3 LLMs, with Opus being the premium model and Haiku the cheapest and most basic.

Cody supports VS Code, Neovim, and the JetBrains family of IDEs including IntelliJ IDEA and Android Studio – though only the VS Code extension is designated stable. The AI assistant has features including coding chat, documenting and explaining code, finding code smells (code that looks flawed or sub-optimal), and generating unit tests. There is also a code completion feature which can autocomplete single lines or whole functions. Programming languages supported include all the most commonly used, though more niche languages may have lower quality responses.

Code is sent to the LLM for analysis, though the FAQ states that “our third-party Language Model (LLM) providers do not train on your specific codebase.”

GitHub’s Copilot is the best-known AI coding assistant, but there are now many other options, including Google’s Gemini Code Assist, Amazon CodeWhisperer, Codeium, JetBrains AI Assistant, Tabnine, and more. Cody has two notable features. One is that its core is open source on GitHub under the Apache 2.0 license. In June 2023, though, Sourcegraph relicensed much of its code, not including the community edition of Cody, from Apache to an enterprise license, stating that “our licensing principle remains to charge companies while making tools for individual devs open source.” The move nonetheless cast doubt on the company’s commitment to open source.

Another key feature is that the LLM used by Cody is pluggable, with the enterprise edition allowing customers to bring their own license – an attractive capability for developers unsure which model will be most productive.