One of the most pressing questions for developers right now is the future of coding. “The Anthropic CEO says that in three to six months, AI will be writing 90 percent of the code software developers were in charge of,” Birgitta Böckeler told attendees at her talk on AI Coding State of Play, to nervous laughter from the audience.

The QCon developer conference is under way in London in the UK this week, and there is a distinct AI flavor to the content. Böckeler, global lead for AI-assisted software delivery at the Thoughtworks development company, told the crowd they should create a culture where both experimentation and AI skepticism get rewarded.

Dio Synodinos, president of C4Media, which runs QCon, kicked off the event by saying that “we are experiencing the most fascinating and the most confusing period in the whole history of software development. If you are experiencing a mix of curiosity, excitement, maybe a bit of uncertainty, or a lot of uncertainty, you are not alone.” Some of the hard questions “might not have easy answers,” he said.

In her talk, Böckeler gave a rapid-fire tour of how AI coding has progressed in the last few years. “It all started with auto-suggest, right?” she remarked, “auto-complete on steroids.” Then came chat, asking questions in the IDE; and then improved context and the ability to “kind-of chat with the codebase,” like asking where the tests are for a particular UI element, useful for examining unfamiliar code. The ability to give more context to large language models (LLMs) improved their capability too, and the models themselves have got better. “The Claude Sonnet models, like 3.5, 3.7, have been by far the most popular for coding,” she said.

What is the impact on productivity, though? It is not the 55 percent faster claimed by the likes of GitHub, she said. Most developers say they spend less than 30 percent of their time on coding, and the AI is not always useful. “Let’s say 60 percent of the time, the coding assistant is actually useful … if you have these numbers, then the impact on your cycle time would be 13 percent … you might not even see that.” In practice, she said, Thoughtworks sees an improvement of around 8 percent from AI-assisted coding; still worth it, but not dramatic.
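The arithmetic behind a figure like 13 percent can be sketched as a simple product: the share of cycle time spent coding, times the share of that time in which the assistant helps, times the speedup when it does. This is only an illustration. The function name and the multiplicative model are assumptions, as is the 40 percent coding share, which is not quoted above; it is simply the value that reproduces roughly 13 percent under this model, whereas a 30 percent share gives about 10 percent.

```python
def cycle_time_impact(coding_share: float, useful_share: float, speedup: float) -> float:
    """Fraction of total cycle time saved under a simple multiplicative model.

    coding_share: fraction of cycle time spent coding
    useful_share: fraction of coding time in which the assistant actually helps
    speedup:      fraction of time saved on the tasks where it helps
    """
    return coding_share * useful_share * speedup


# Quoted inputs: assistant useful 60% of the time, vendor claim of 55% faster.
# ASSUMPTION: a 40% coding share is not stated in the article; it is chosen
# here only because it reproduces the ~13% figure under this model.
print(f"{cycle_time_impact(0.40, 0.60, 0.55):.1%}")  # 13.2%

# With a 30% coding share, the same model gives a smaller effect.
print(f"{cycle_time_impact(0.30, 0.60, 0.55):.1%}")  # 9.9%
```

Either way, the point of the calculation stands: a large speedup on a minority of the work shrinks to a modest change in overall cycle time.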

Gen AI, though, is a moving target. “Now agents have entered the field.” Despite wide use, the term agent is not yet fully defined, said Böckeler, but in this case, “agent” means a coding assistant that has access to tools: the ability to read files, change files, execute commands, and run tests. The assistant puts together a “nicely orchestrated prompt” which it sends to the LLM, together with a description of the tools it has available, “almost like an API description.” The LLM may come back with more questions “so we’ll go back and forth, that’s how agents work,” said Böckeler.
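The back-and-forth described here can be sketched in a few lines. This is a toy illustration, not how any particular assistant is built: `fake_llm` is a scripted stand-in for a real model, and the tool names, message format, and `run_agent` helper are all hypothetical.

```python
import json
from typing import Callable

# Hypothetical in-memory "file system" so the sketch runs anywhere.
FAKE_FILES = {"app.py": "print('hello')"}


def read_file(path: str) -> str:
    return FAKE_FILES.get(path, "")


def run_tests() -> str:
    return "2 passed"


# Tools the assistant can execute on the model's behalf.
TOOLS: dict[str, Callable[..., str]] = {"read_file": read_file, "run_tests": run_tests}

# Descriptions sent to the model alongside the prompt, "almost like an API description".
TOOL_DESCRIPTIONS = [
    {"name": "read_file", "description": "Return the contents of a file", "parameters": {"path": "string"}},
    {"name": "run_tests", "description": "Run the test suite", "parameters": {}},
]


def fake_llm(messages: list[dict]) -> dict:
    """Scripted stand-in for a model: asks for two tools, then answers."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if len(tool_results) == 0:
        return {"tool": "read_file", "args": {"path": "app.py"}}
    if len(tool_results) == 1:
        return {"tool": "run_tests", "args": {}}
    return {"answer": "Read app.py and ran the tests: " + tool_results[-1]["content"]}


def run_agent(task: str, llm=fake_llm, max_steps: int = 5) -> str:
    """Loop: send context to the model, run any tool it requests, repeat."""
    messages = [
        {"role": "system", "content": "Tools: " + json.dumps(TOOL_DESCRIPTIONS)},
        {"role": "user", "content": task},
    ]
    for _ in range(max_steps):
        reply = llm(messages)
        if "answer" in reply:
            return reply["answer"]
        result = TOOLS[reply["tool"]](**reply["args"])
        messages.append({"role": "tool", "content": result})
    return "stopped: step limit reached"


print(run_agent("Check that the tests pass"))
```

The step limit is a design choice in this sketch: without one, a model that keeps requesting tools would loop indefinitely.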

“I use it all the time, not just because it’s my job. It’s a little bit addictive … I saw someone write on Reddit, it’s a bit like a slot machine, either you win or you don’t win, you always have to put in more money to try again,” she added.

Agents help resolve issues much faster a lot of the time, said Böckeler.

The speaker also mentioned MCP (Model Context Protocol) “which is exploding at the moment.” Agents are MCP clients, and MCP servers can run anywhere including on the local machine. Developers can code MCP servers to perform actions such as “querying my particular test database, or adding a comment to a JIRA ticket.” The coding assistant can send descriptions of the MCP tools to the LLM, extending the capabilities available to the agent.
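To make the idea of a tool description concrete, the sketch below shows roughly what one might look like as data, with a name, a human-readable description, and a JSON Schema for the inputs. The ticket-comment tool, its field values, and both helper functions are hypothetical, and the code does not implement the protocol itself; it only illustrates the kind of description that gets passed to the LLM.

```python
import json

# Hypothetical tool: the name, wording, and schema are illustrative only.
ADD_COMMENT_TOOL = {
    "name": "add_ticket_comment",
    "description": "Add a comment to an issue-tracker ticket",
    "inputSchema": {
        "type": "object",
        "properties": {
            "ticket_id": {"type": "string"},
            "comment": {"type": "string"},
        },
        "required": ["ticket_id", "comment"],
    },
}


def describe_for_prompt(tools: list[dict]) -> str:
    """Serialize tool descriptions so they can be sent along with the prompt."""
    return json.dumps(tools, indent=2)


def missing_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return required argument names that a requested call left out."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


print(describe_for_prompt([ADD_COMMENT_TOOL]))
print(missing_arguments(ADD_COMMENT_TOOL, {"ticket_id": "ABC-1"}))  # ['comment']
```

Because the description text is sent to the model verbatim, it is also worth reading before installing a server someone else wrote.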

What can go wrong? asked Böckeler. A lot of people are building and sharing MCP servers and “what you’re doing with the coding assistant is in the middle of your software supply chain, a very valuable target,” she said. A tool could contain a statement that “called some attacker server,” which would go unnoticed unless you look at what it is doing.

The theme of the talk now turned to other problems with AI coding. “There is no way that [your models] will do what you want all the time, especially as your sessions get longer,” Böckeler said. Bigger context windows – the amount of context that gets sent to the LLM – mean less attention to all the details. Instructions like “follow best practices” might not work, because we do not know what the LLM considers best practice.

Developers can add rules files that guide the LLM to observe organizational standards or other best practices, but there are hazards here too. “People share [custom rules] on the internet,” said Böckeler, and a vulnerability was found where hidden characters could be placed in the rules that lead LLMs to generate additional code. “They might put a script tag in your HTML that calls some attacker server.”
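One modest defense is to scan a shared rules file for invisible characters before using it. The sketch below is an assumption about how such a check might look, not a description of the reported vulnerability or of any vendor's fix: it flags every character in the Unicode "format" category (Cf), which covers zero-width characters and bidirectional controls, and the function name is hypothetical.

```python
import unicodedata


def find_hidden_chars(text: str) -> list[tuple[int, int, str]]:
    """Return (line, column, code point) for each invisible format character."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for col, ch in enumerate(line, start=1):
            # Category "Cf" includes zero-width spaces/joiners and bidi overrides.
            if unicodedata.category(ch) == "Cf":
                findings.append((line_no, col, f"U+{ord(ch):04X}"))
    return findings


# Example rules text with a zero-width space and a right-to-left override hidden in it.
rules = "Always write tests.\nUse strict\u200b mode.\u202e"
for line_no, col, code in find_hidden_chars(rules):
    print(f"line {line_no}, column {col}: {code}")
# line 2, column 11: U+200B
# line 2, column 18: U+202E
```

A check like this can produce false positives, since some format characters are legitimate in certain scripts, so the findings are best treated as prompts for manual review.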

Böckeler also mentioned vibe coding and how it can end in disaster if not used carefully, as we have recounted previously.

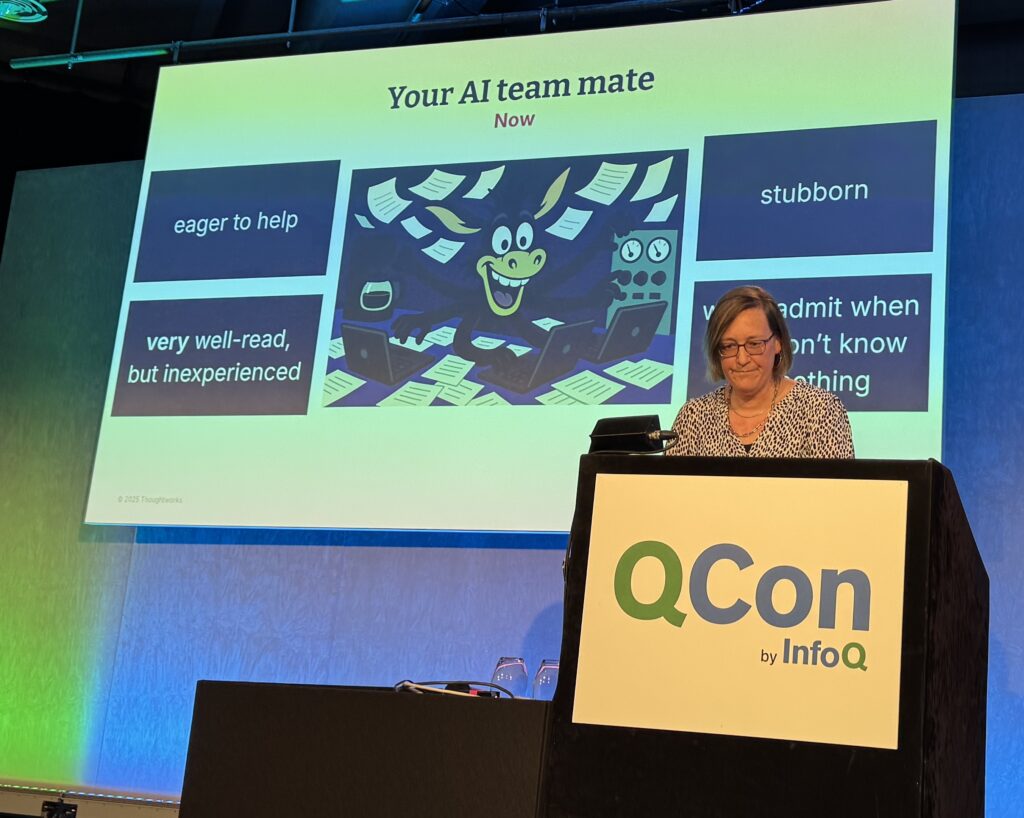

AI, said Böckeler, is “eager to help, very well read, but inexperienced.” It is a team member that “won’t admit when they don’t know something.” It is a risk when used by amateurs who will not recognize when it goes wrong.

She gave an example where, when she was working on a Dockerfile as part of a JavaScript application, the AI tool told her it was hitting an out-of-memory error and that the memory limit should be increased. However, that was the wrong fix. She needed to ask why the memory error occurred and fix that instead. This kind of brute-force fix will lead to “further pain down the road.”

Böckeler referenced GitClear research showing that AI coding is increasing code churn and reducing refactoring, storing up problems for the future.

“Gen AI is in our toolbox now, it’s not going away, and even though I’m ending on these cautionary notes, it is very useful if used in a responsible way,” she said.

Development teams have to learn how to manage AI coding. “Don’t shift right with AI,” said Böckeler, referencing tools that do AI code review on pull requests. “Why does it have to be in the pull request? Why not before I push?”

AI coding has inherent limitations. “There are some things that … I cannot see how you can really ever fully get over them when you use large language models,” she said.

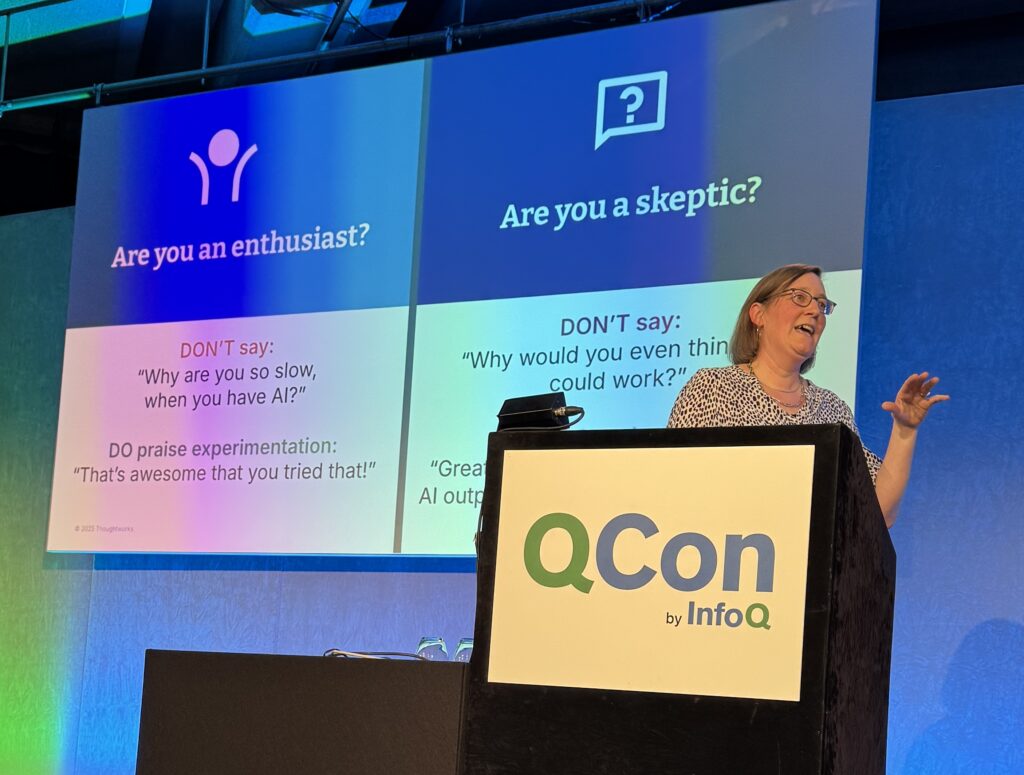

Within teams, there will be both skeptics and enthusiasts for AI, said Böckeler. She finished with advice to appreciate teammates. “If you’re an enthusiast, praise the skeptics when they try something that two months ago, they wouldn’t have tried. If you’re more of a skeptic, appreciate that your colleagues are trying things, because AI has some unexpected features and downsides, and praise when they are having a closer look at the outputs.”