JetBrains has updated its AI Assistant, currently in preview, including a new way to restrict its use for organizations keen to ensure that their code is not shared with an LLM (Large Language Model) provider.

“When we first announced the AI Assistant, many customers stated that they would need to restrict its use, due to company policies that do not allow source code to be shared with third parties,” explained Dmitry Jemerov, IntelliJ ML team lead at JetBrains. There is now support for a .noai file, created in the root directory of a project, which when present means that “all AI Assistant features will be fully disabled in the project.”

Jemerov further promised that “In the future, we’ll continue looking into other ways to provide organizations with control over the use of AI Assistant.”
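As a rough illustration of the opt-out described above, the following Python sketch creates the marker file in a project's root directory and checks for its presence. The helper names `disable_ai_assistant` and `is_ai_assistant_disabled` are hypothetical, and the sketch assumes that an empty file named `.noai` is enough; the IDE performs its own check, which this code does not reproduce.

```python
from pathlib import Path
import tempfile


def disable_ai_assistant(project_root: Path) -> Path:
    """Create a .noai marker file in the project root (hypothetical helper).

    Assumption: an empty file is enough; the article only says the file
    must be present in the root directory of the project.
    """
    marker = project_root / ".noai"
    marker.touch(exist_ok=True)  # leaves an existing marker in place
    return marker


def is_ai_assistant_disabled(project_root: Path) -> bool:
    """Report whether the marker file exists (illustrative check only)."""
    return (project_root / ".noai").is_file()


if __name__ == "__main__":
    # Use a throwaway directory so the demo does not touch a real project.
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        print(is_ai_assistant_disabled(root))  # marker not created yet
        disable_ai_assistant(root)
        print(is_ai_assistant_disabled(root))  # marker now present
```

Because the marker is an ordinary file, a team could presumably commit it to version control so that the restriction travels with the repository, though whether that suits a given organization's policy is a separate question.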

There are two aspects to the way AI Assistant handles code sharing. First, some code sharing is unavoidable, because the assistant cannot work without sending data to the LLM provider. “When you use AI features, the IDE needs to send your requests and code to the LLM provider. In addition to the prompts you type, the IDE may send additional details, such as pieces of your code, file types, frameworks used, and any other information that may be necessary for providing context to the LLM,” states the documentation. Currently, the only LLM provider is OpenAI using the GPT-4 or GPT-3.5 models, but this may change in future.

Second, there is optional telemetry for product improvement, which includes “the full communication between you and the LLM (both text and code fragments)” and which is used by JetBrains, but not shared with third parties or used for model training. This type of data sharing is only enabled in preview builds of the JetBrains IDEs, can be disabled in settings, and is retained for a maximum of one year. Understanding what is being generated and how often it is accepted by developers is important for developing the product.

JetBrains does not state how the LLM provider handles shared code, saying only that it will be “processed according to their data collection and use policy.” OpenAI, for example, states that “Your API inputs and outputs do not become part of the training data unless you explicitly opt in.” Commenting on Hacker News, Jemerov said that, compared to GitHub Copilot, “there’s no difference in terms of how sharing code is handled. Both Copilot and AI Assistant send your code to a[n] LLM, and neither of those tools will use your code for training code generation models.”

Further updates to AI Assistant include the ability to define custom prompts for sending to the AI chat, including limited context, and an “explain runtime error” feature for Java and Python.

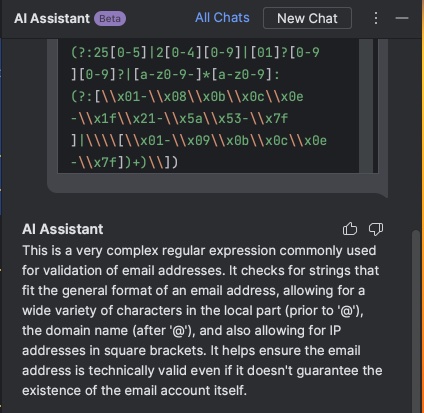

Another feature is “Explain RegExp/SQL/Cron”, covering three languages that can be perplexing, especially to newcomers. Regular expressions are used to identify whether text matches a pattern, such as an email address; SQL (Structured Query Language) is used to query and update databases; and Cron is used to schedule jobs on Unix-like operating systems.
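To give a flavour of the three notations, here is a minimal Python sketch using only the standard library. The email pattern is deliberately simplified, and the table, column, and function names are invented for illustration; none of this is taken from AI Assistant itself.

```python
import re
import sqlite3

# Simplified email pattern for illustration; real-world validation is looser
# and more complicated than this.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(\.[\w-]+)+")


def looks_like_email(text: str) -> bool:
    """Return True if the whole string matches the simplified pattern."""
    return EMAIL_PATTERN.fullmatch(text) is not None


def names_of_adults(rows):
    """Load (name, age) rows into an in-memory table and query it with SQL."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE users (name TEXT, age INTEGER)")
        conn.executemany("INSERT INTO users VALUES (?, ?)", rows)
        cursor = conn.execute(
            "SELECT name FROM users WHERE age >= 18 ORDER BY name"
        )
        return [row[0] for row in cursor.fetchall()]
    finally:
        conn.close()


# The five fields of a standard cron schedule, in order.
CRON_FIELDS = ("minute", "hour", "day_of_month", "month", "day_of_week")


def label_cron_fields(expression: str) -> dict:
    """Pair each field of a five-field cron schedule with its meaning."""
    parts = expression.split()
    if len(parts) != len(CRON_FIELDS):
        raise ValueError("expected a five-field cron schedule")
    return dict(zip(CRON_FIELDS, parts))


if __name__ == "__main__":
    print(looks_like_email("dev@example.com"))
    print(names_of_adults([("Ann", 34), ("Bob", 12), ("Cy", 18)]))
    # "30 2 * * 1" means 02:30 every Monday in standard cron syntax.
    print(label_cron_fields("30 2 * * 1"))
```

Each notation packs a lot of meaning into a few characters, which goes some way to explaining why a plain-language explanation can be useful.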

We tried the Explain RegExp feature, which curiously gave us slightly different answers when repeated with the same expression, though both were accurate. Sometimes it will explain the expression blow by blow, and sometimes summarise it.

AI Assistant remains in limited preview with a waitlist, and pricing for when the service becomes generally available has not yet been announced.