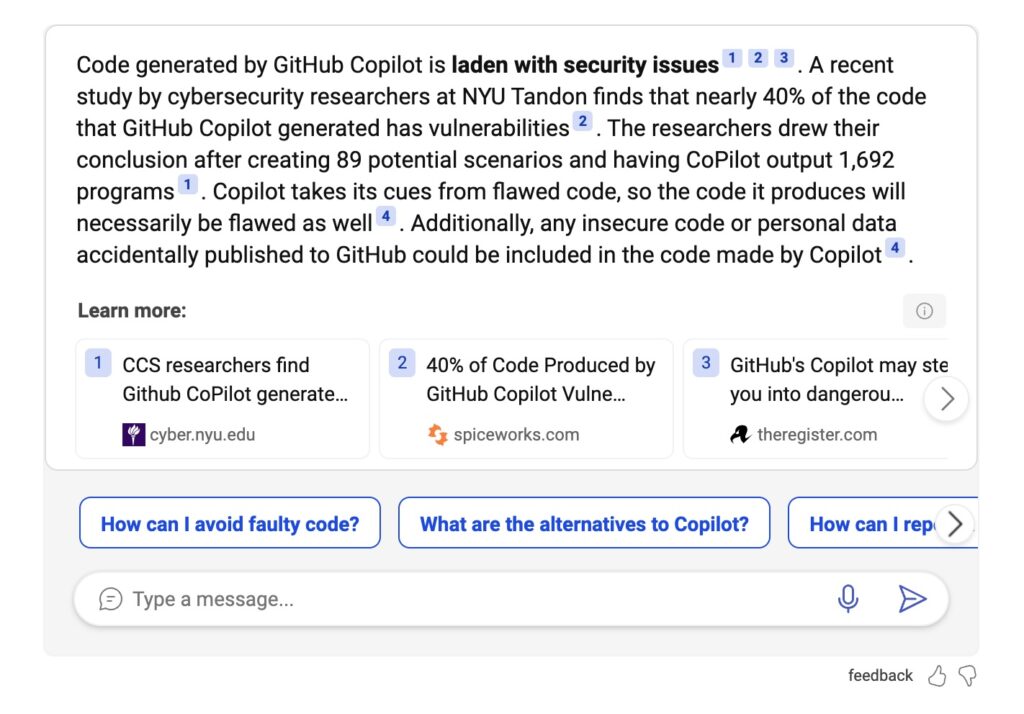

A new report suggests that AI coding tools “consistently generate insecure code,” highlighting an aspect of automated coding that may be neglected.

AI-generated code continues to make waves, expanding its scope from help with code snippets to writing entire applications. However, security vendor Snyk claims that AI code completion “continues to inject security risks into the development process,” and that “developers are actively bypassing AI usage policies.”

The claims are based on a survey of 537 IT managers and developers from the USA, UK and Canada. The sample is somewhat tilted towards smaller companies, with nearly half of respondents working at businesses with 500 employees or fewer. The AI coding tools in use included ChatGPT (70.3 percent), Amazon CodeWhisperer (47.4 percent), GitHub Copilot (43.7 percent) and others.

According to the survey, three quarters of developers said that “AI code is more secure than human code.” Given that humans are prone to mistakes, that might not seem surprising, but the Snyk researchers cite it as an example of “a deep cognitive bias” that is dangerous for application security.

More than 70 percent of respondents feel that AI code suggestions make them more productive, which might account for the fact that over half of those surveyed are happy to bypass security policies in order to use them. Almost 55 percent do this all or most of the time. Further, if developers accept insecure code generated by AI, that could increase the likelihood of similarly insecure code being suggested to others.

In companies that do attempt to restrict the use of AI coding tools, security concerns are the biggest reason, followed by data privacy, quality and cost.

Snyk references a Stanford University study from late last year that looked at “how developers choose to interact with AI code assistants and the ways in which those interactions cause security mistakes,” though the study was limited to university students. There are some shocks here, including the AI assistant's willingness to generate SQL “that built the query string via string concatenation,” a sure route to SQL injection vulnerabilities. A professional developer should reject such code, though, and the implication is that the security of AI-assisted coding depends not just on the developer, nor just on the AI assistant, but on the interaction between them.
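To illustrate why string concatenation is so risky, here is a minimal sketch using Python's standard-library `sqlite3` module. The `users` table and the two function names are hypothetical, chosen only for illustration; this is not code from the Stanford study or from any AI assistant. The first function splices user input into the SQL text, while the second passes it as a bound parameter.

```python
import sqlite3

def find_user_unsafe(conn, name):
    # Vulnerable: user input is concatenated directly into the SQL text,
    # so a crafted value can change the structure of the query itself
    query = "SELECT id, name FROM users WHERE name = '" + name + "' ORDER BY id"
    return conn.execute(query).fetchall()

def find_user_safe(conn, name):
    # Parameterized: the "?" placeholder makes the driver treat name as data only
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ? ORDER BY id", (name,)
    ).fetchall()

# Hypothetical in-memory database with two rows for demonstration
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

payload = "' OR '1'='1"
print(find_user_unsafe(conn, payload))  # [(1, 'alice'), (2, 'bob')]: every row leaks
print(find_user_safe(conn, payload))    # []: no user is literally named that
```

With the injected value, the unsafe version runs `WHERE name = '' OR '1'='1'`, which matches every row; the parameterized version simply looks for a user with that odd name and finds none.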

The problem is similar in some ways to that of copying code from examples or from Stack Overflow; such code might not be secure, because it demonstrates how to solve a problem rather than offering something production-ready. In the Stanford study, we read that “15.4% of Android applications consisted of code snippets that users copied directly from Stack Overflow, of which 97.9% had vulnerabilities,” a figure taken from the 2017 paper “Stack Overflow considered harmful.” The trade-off between productivity and security may be similar today among careless users of AI code generation.

A key problem is that human intuition seems inclined to trust AI engines more than their known limitations merit. It is known that generative AI is not reliable; yet a lifetime of learning that computers are more logical than humans is hard to overcome.

The better news is that the most skilled practitioners suffer fewer of these problems. “We found that participants who invested more in the creation of their queries to the AI assistant, such as providing helper functions or adjusting the parameters, were more likely to eventually provide secure solutions,” the Stanford researchers report.

Snyk, being a security vendor, points organizations towards the use of vulnerability scanning tools and its “DeepCode AI” tool, which is also AI-driven, as a solution. The broader picture, though, is that since AI coding tools are here to stay, training in how to use them sensibly is now a critical part of what it takes to be a professional developer.