GitHub code scanning autofix, a service that automatically detects code vulnerabilities and suggests fixes, is now in public preview for GitHub Advanced Security customers.

The service, first introduced at the Universe event in November last year, supports JavaScript, TypeScript, Java and Python, with C# and Go coming next, according to the official post.

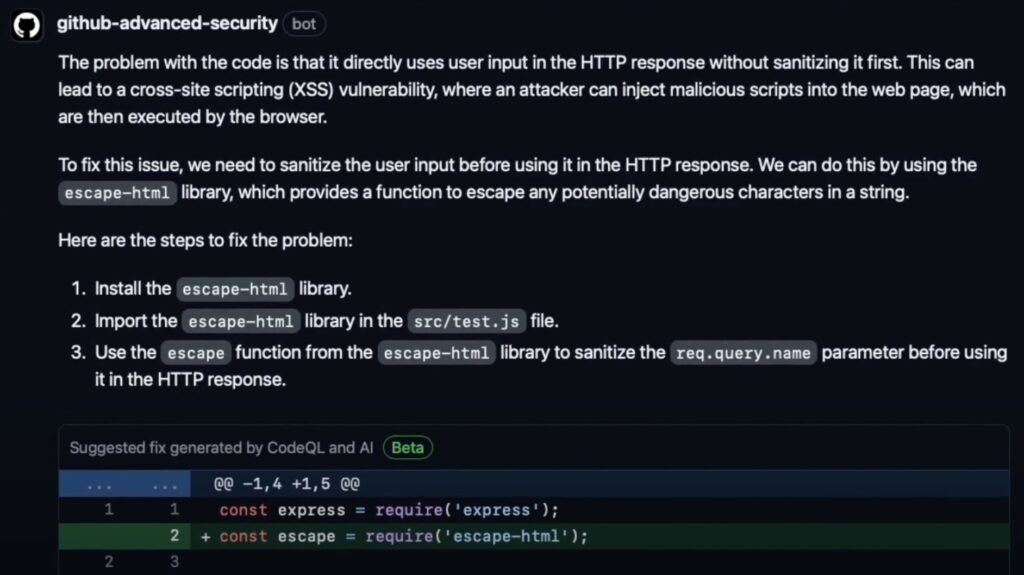

Under the covers there are two services working together. CodeQL is a semantic code analysis engine that lets developers write queries against a database created from a code base. CodeQL queries can identify security vulnerabilities and bugs, and GitHub uses this service to identify insecure code in pull requests.

Once CodeQL has analysed the code, Copilot comes into play, generating code suggestions which are posted to the pull request, where developers can view, accept or modify the proposed fixes and merge them into the code.
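To make the alert-then-fix workflow more concrete, here is a minimal Python sketch of the kind of problem and remedy involved. It assumes a SQL injection finding of the sort CodeQL's `py/sql-injection` query reports. The function names and the `users` table are hypothetical, and the fix shown is the standard parameterized-query remedy, not output captured from autofix.

```python
import sqlite3


def find_user_vulnerable(conn: sqlite3.Connection, name: str):
    # Vulnerable: user input is spliced into the SQL text, so a value such as
    # "x' OR '1'='1" changes the meaning of the query (SQL injection).
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_fixed(conn: sqlite3.Connection, name: str):
    # Typical fix: pass the input as a bound parameter so the driver treats it
    # strictly as data, never as part of the SQL statement.
    query = "SELECT id, name FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

    payload = "x' OR '1'='1"
    print(len(find_user_vulnerable(conn, payload)))  # every row comes back: 2
    print(len(find_user_fixed(conn, payload)))       # nothing matches: 0
```

A suggested fix of this shape is small and local, which is why it can often be committed with little or no editing; whether it is correct still depends on how the surrounding code uses the function.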

The detailed documentation adds that “autofix uses internal GitHub Copilot APIs and private instances of OpenAI large language models (LLMs) such as GPT-4, which have sufficient generative capabilities to produce both suggested fixes in code and explanatory text for those fixes.”

According to GitHub, developers confronted with security alerts often do not know how to fix them. “Developers often have little training in code security,” the docs state, “so fixing these alerts requires substantial effort.”

When alerts are processed, snippets of code are sent to the LLM, but they are not used for training purposes.

As is common with AI tools, GitHub warns that the suggested fixes will not always be right. A fix will be suggested for the majority of alerts, we are told, and most of the time it can be committed either without editing or with minor edits, but “a small percentage of suggested fixes will reflect a significant misunderstanding of the codebase or the vulnerability.”

A list of limitations notes that autofix works best with English-language code and that it may generate syntax errors, insert code in the wrong location, or suggest changes that fail to fix the vulnerability or even introduce new ones. There are also potential risks with dependencies, since the AI may suggest changes to dependencies and “does not know which versions of an existing dependency are supported or secure.”

GitHub suggests using continuous integration testing to mitigate these limitations. They sound severe when spelt out, but if the service mostly gets it right it can still deliver substantial benefit. Even so, this is a reminder that skilled oversight remains a requirement for use of AI coding tools.
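As one illustration of that advice, here is a minimal sketch of a check a CI job could run before a suggested fix is merged. It assumes a Python code base and covers only one of the listed failure modes, syntax errors, by compiling each changed file with Python's built-in `compile` without executing it. The `syntax_errors` helper and the script's command-line usage are hypothetical, and a check like this complements rather than replaces a real test suite.

```python
import sys
from pathlib import Path


def syntax_errors(sources: dict[str, str]) -> list[str]:
    """Return one message per source that fails to compile.

    `sources` maps a file name to its text; here it stands in for the files
    touched by a suggested fix (a hypothetical input for this sketch).
    """
    problems = []
    for filename, text in sources.items():
        try:
            # compile() parses and byte-compiles the text without running it.
            compile(text, filename, "exec")
        except SyntaxError as exc:
            problems.append(f"{filename}:{exc.lineno}: {exc.msg}")
    return problems


if __name__ == "__main__":
    # Hypothetical usage: python check_fix.py path/to/changed_file.py ...
    files = {p: Path(p).read_text(encoding="utf-8") for p in sys.argv[1:]}
    errors = syntax_errors(files)
    for line in errors:
        print(line)
    if errors:
        # A non-zero exit status fails the CI job.
        sys.exit(1)
```

Catching a syntax error is the cheap part; the subtler failures listed above, such as a fix that does not close the vulnerability, need behavioural tests and human review.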

The new service is only on offer as part of GitHub Advanced Security ($49 per committer per month), which itself is only available as an add-on to an Enterprise plan ($21 per user per month). A question about availability for open source users drew the reply: “we do not plan on shipping it to open source at the moment.”